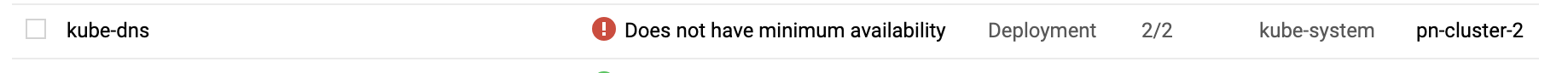

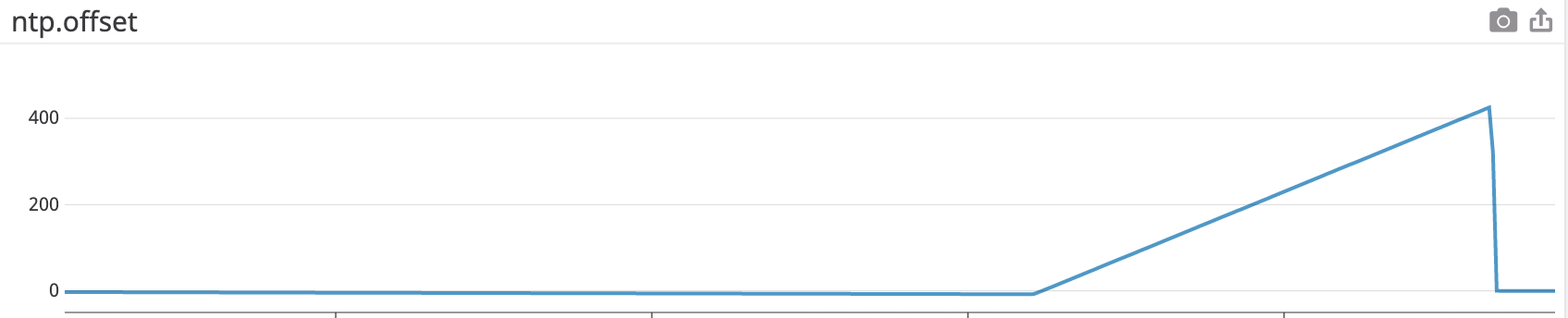

```
Name:               kube-dns-76dbb796c5-9gljt
Namespace:          kube-system
Priority:           2000000000
PriorityClassName:  system-cluster-critical
Node:               gke-pn-cluster-2-pn-pool-1-efba661f-pz54/10.146.0.2
Start Time:         Sun, 05 May 2019 14:46:19 +0900
Labels:             k8s-app=kube-dns
                    pod-template-hash=3286635271
Annotations:        scheduler.alpha.kubernetes.io/critical-pod=
                    seccomp.security.alpha.kubernetes.io/pod=docker/default
Status:             Running
IP:                 10.4.0.134
Controlled By:      ReplicaSet/kube-dns-76dbb796c5
Containers:
  kubedns:
    Container ID:  docker://2e341ab157aee24b63d95eefb4da434c79306229055d135abf6b730708589d68
    Image:         k8s.gcr.io/k8s-dns-kube-dns-amd64:1.14.13
    Image ID:      docker-pullable://k8s.gcr.io/k8s-dns-kube-dns-amd64@sha256:618a82fa66cf0c75e4753369a6999032372be7308866fc9afb381789b1e5ad52
    Ports:         10053/UDP, 10053/TCP, 10055/TCP
    Host Ports:    0/UDP, 0/TCP, 0/TCP
    Args:
      --domain=cluster.local.
      --dns-port=10053
      --config-dir=/kube-dns-config
      --v=2
    State:          Running
      Started:      Sun, 05 May 2019 14:46:42 +0900
    Ready:          True
    Restart Count:  0
    Limits:
      memory:  170Mi
    Requests:
      cpu:      100m
      memory:   70Mi
    Liveness:   http-get http://:10054/healthcheck/kubedns delay=60s timeout=5s period=10s
    Readiness:  http-get http://:8081/readiness delay=3s timeout=5s period=10s
    Environment:
      PROMETHEUS_PORT:  10055
    Mounts:
      /kube-dns-config from kube-dns-config (rw)
      /var/run/secrets/kubernetes.io/serviceaccount from kube-dns-token-jdksn (ro)
  dnsmasq:
    Container ID:  docker://5cc16055a401b91bd15ba6507c9f2b7b4e4b20647496746d978cb211e1a0555d
    Image:         k8s.gcr.io/k8s-dns-dnsmasq-nanny-amd64:1.14.13
    Image ID:      docker-pullable://k8s.gcr.io/k8s-dns-dnsmasq-nanny-amd64@sha256:45df3e8e0c551bd0c79cdba48ae6677f817971dcbd1eeed7fd1f9a35118410e4
    Ports:         53/UDP, 53/TCP
    Host Ports:    0/UDP, 0/TCP
    Args:
      -v=2
      -logtostderr
      -configDir=/etc/k8s/dns/dnsmasq-nanny
      -restartDnsmasq=true
      --
      -k
      --cache-size=1000
      --no-negcache
      --log-facility=-
      --server=/cluster.local/127.0.0.1#10053
      --server=/in-addr.arpa/127.0.0.1#10053
      --server=/ip6.arpa/127.0.0.1#10053
    State:          Waiting
      Reason:       CrashLoopBackOff
    Last State:     Terminated
      Reason:       Error
      Exit Code:    255
      Started:      Sun, 05 May 2019 15:28:15 +0900
      Finished:     Sun, 05 May 2019 15:28:16 +0900
    Ready:          False
    Restart Count:  13
    Requests:
      cpu:        150m
      memory:     20Mi
    Liveness:     http-get http://:10054/healthcheck/dnsmasq delay=60s timeout=5s period=10s
    Environment:  <none>
    Mounts:
      /etc/k8s/dns/dnsmasq-nanny from kube-dns-config (rw)
      /var/run/secrets/kubernetes.io/serviceaccount from kube-dns-token-jdksn (ro)
  sidecar:
    Container ID:  docker://f8c87600c704dd709d27c518d0b3ce20d944608be16f1436442970454715977a
    Image:         k8s.gcr.io/k8s-dns-sidecar-amd64:1.14.13
    Image ID:      docker-pullable://k8s.gcr.io/k8s-dns-sidecar-amd64@sha256:cedc8fe2098dffc26d17f64061296b7aa54258a31513b6c52df271a98bb522b3
    Port:          10054/TCP
    Host Port:     0/TCP
    Args:
      --v=2
      --logtostderr
      --probe=kubedns,127.0.0.1:10053,kubernetes.default.svc.cluster.local,5,SRV
      --probe=dnsmasq,127.0.0.1:53,kubernetes.default.svc.cluster.local,5,SRV
    State:          Running
      Started:      Sun, 05 May 2019 14:46:50 +0900
    Ready:          True
    Restart Count:  0
    Requests:
      cpu:        10m
      memory:     20Mi
    Liveness:     http-get http://:10054/metrics delay=60s timeout=5s period=10s
    Environment:  <none>
    Mounts:
      /var/run/secrets/kubernetes.io/serviceaccount from kube-dns-token-jdksn (ro)
  prometheus-to-sd:
    Container ID:  docker://82d84415443253693be30368ed14555ff24ba21844ae607abf990c369008f70e
    Image:         k8s.gcr.io/prometheus-to-sd:v0.4.2
    Image ID:      docker-pullable://gcr.io/google-containers/prometheus-to-sd@sha256:aca8ef83a7fae83f1f8583e978dd4d1ff655b9f2ca0a76bda5edce6d8965bdf2
    Port:          <none>
    Host Port:     <none>
    Command:
      /monitor
      --source=kubedns:http://localhost:10054?whitelisted=probe_kubedns_latency_ms,probe_kubedns_errors,dnsmasq_misses,dnsmasq_hits
      --stackdriver-prefix=container.googleapis.com/internal/addons
      --api-override=https://monitoring.googleapis.com/
      --pod-id=$(POD_NAME)
      --namespace-id=$(POD_NAMESPACE)
      --v=2
    State:          Running
      Started:      Sun, 05 May 2019 14:46:51 +0900
    Ready:          True
    Restart Count:  0
    Environment:
      POD_NAME:       kube-dns-76dbb796c5-9gljt (v1:metadata.name)
      POD_NAMESPACE:  kube-system (v1:metadata.namespace)
    Mounts:
      /var/run/secrets/kubernetes.io/serviceaccount from kube-dns-token-jdksn (ro)
Conditions:
  Type           Status
  Initialized    True
  Ready          False
  PodScheduled   True
Volumes:
  kube-dns-config:
    Type:      ConfigMap (a volume populated by a ConfigMap)
    Name:      kube-dns
    Optional:  true
  kube-dns-token-jdksn:
    Type:        Secret (a volume populated by a Secret)
    SecretName:  kube-dns-token-jdksn
    Optional:    false
QoS Class:       Burstable
Node-Selectors:  <none>
Tolerations:     CriticalAddonsOnly
                 node.kubernetes.io/not-ready:NoExecute for 300s
                 node.kubernetes.io/unreachable:NoExecute for 300s
Events:
  Type     Reason                 Age                 From                                               Message
  ----     ------                 ----                ----                                               -------
  Normal   Scheduled              46m                 default-scheduler                                  Successfully assigned kube-system/kube-dns-76dbb796c5-9gljt to gke-pn-cluster-2-pn-pool-1-efba661f-pz54
  Normal   SuccessfulMountVolume  46m                 kubelet, gke-pn-cluster-2-pn-pool-1-efba661f-pz54  MountVolume.SetUp succeeded for volume "kube-dns-config"
  Normal   SuccessfulMountVolume  46m                 kubelet, gke-pn-cluster-2-pn-pool-1-efba661f-pz54  MountVolume.SetUp succeeded for volume "kube-dns-token-jdksn"
  Normal   Pulling                46m                 kubelet, gke-pn-cluster-2-pn-pool-1-efba661f-pz54  pulling image "k8s.gcr.io/k8s-dns-kube-dns-amd64:1.14.13"
  Normal   Pulled                 46m                 kubelet, gke-pn-cluster-2-pn-pool-1-efba661f-pz54  Successfully pulled image "k8s.gcr.io/k8s-dns-kube-dns-amd64:1.14.13"
  Normal   Created                46m                 kubelet, gke-pn-cluster-2-pn-pool-1-efba661f-pz54  Created container
  Normal   Started                46m                 kubelet, gke-pn-cluster-2-pn-pool-1-efba661f-pz54  Started container
  Normal   Pulling                46m                 kubelet, gke-pn-cluster-2-pn-pool-1-efba661f-pz54  pulling image "k8s.gcr.io/k8s-dns-dnsmasq-nanny-amd64:1.14.13"
  Normal   Pulled                 46m                 kubelet, gke-pn-cluster-2-pn-pool-1-efba661f-pz54  Successfully pulled image "k8s.gcr.io/k8s-dns-dnsmasq-nanny-amd64:1.14.13"
  Normal   Pulling                46m                 kubelet, gke-pn-cluster-2-pn-pool-1-efba661f-pz54  pulling image "k8s.gcr.io/k8s-dns-sidecar-amd64:1.14.13"
  Normal   Created                45m                 kubelet, gke-pn-cluster-2-pn-pool-1-efba661f-pz54  Created container
  Normal   Pulled                 45m                 kubelet, gke-pn-cluster-2-pn-pool-1-efba661f-pz54  Successfully pulled image "k8s.gcr.io/k8s-dns-sidecar-amd64:1.14.13"
  Normal   Pulling                45m                 kubelet, gke-pn-cluster-2-pn-pool-1-efba661f-pz54  pulling image "k8s.gcr.io/prometheus-to-sd:v0.4.2"
  Normal   Started                45m                 kubelet, gke-pn-cluster-2-pn-pool-1-efba661f-pz54  Started container
  Normal   Created                45m                 kubelet, gke-pn-cluster-2-pn-pool-1-efba661f-pz54  Created container
  Normal   Started                45m                 kubelet, gke-pn-cluster-2-pn-pool-1-efba661f-pz54  Started container
  Normal   Pulled                 45m                 kubelet, gke-pn-cluster-2-pn-pool-1-efba661f-pz54  Successfully pulled image "k8s.gcr.io/prometheus-to-sd:v0.4.2"
  Normal   Created                45m (x2 over 46m)   kubelet, gke-pn-cluster-2-pn-pool-1-efba661f-pz54  Created container
  Normal   Started                45m (x2 over 46m)   kubelet, gke-pn-cluster-2-pn-pool-1-efba661f-pz54  Started container
  Normal   Pulled                 45m (x2 over 45m)   kubelet, gke-pn-cluster-2-pn-pool-1-efba661f-pz54  Container image "k8s.gcr.io/k8s-dns-dnsmasq-nanny-amd64:1.14.13" already present on machine
  Warning  BackOff                1m (x211 over 45m)  kubelet, gke-pn-cluster-2-pn-pool-1-efba661f-pz54  Back-off restarting failed container
```
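In this pod, the crashing `dnsmasq` container's `--server=/<domain>/<address>#<port>` arguments send DNS queries for `cluster.local`, `in-addr.arpa`, and `ip6.arpa` to the `kubedns` container, which listens on `127.0.0.1:10053` (its `--dns-port`). The Python sketch below decodes those arguments to make the routing explicit. It assumes only the single-domain `/domain/address#port` form that appears in this output, and the helper `parse_server_arg` and the `ForwardRule` type are hypothetical names used only for illustration.

```python
from typing import NamedTuple


class ForwardRule(NamedTuple):
    """One dnsmasq forwarding rule: queries under `domain` go to `address:port`."""
    domain: str
    address: str
    port: int


def parse_server_arg(arg: str) -> ForwardRule:
    """Parse a `--server=/domain/address#port` argument (hypothetical helper).

    Only the single-domain form used by the kube-dns pod above is handled;
    dnsmasq accepts other --server forms that this sketch does not cover.
    """
    prefix = "--server="
    if not arg.startswith(prefix):
        raise ValueError(f"not a --server argument: {arg!r}")
    # "/cluster.local/127.0.0.1#10053" -> ["", "cluster.local", "127.0.0.1#10053"]
    _, domain, target = arg[len(prefix):].split("/", 2)
    # dnsmasq separates address and port with '#'; 53 is the default DNS port
    address, _, port = target.partition("#")
    return ForwardRule(domain, address, int(port) if port else 53)


# The three --server arguments from the dnsmasq container above
dnsmasq_args = [
    "--server=/cluster.local/127.0.0.1#10053",
    "--server=/in-addr.arpa/127.0.0.1#10053",
    "--server=/ip6.arpa/127.0.0.1#10053",
]

for rule in map(parse_server_arg, dnsmasq_args):
    print(f"{rule.domain:<14} -> {rule.address}:{rule.port}")
```

All three rules point at the same local address and port, so dnsmasq answers cluster and reverse lookups only when the `kubedns` container in the same pod is reachable on `127.0.0.1:10053`.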
